Mastering Systematic Literature Review Methodology

Learn the systematic literature review methodology from start to finish. A practical guide on defining questions, searching, screening, and synthesizing data.

A proper systematic literature review doesn't start with a frantic database search. It begins with a rock-solid, pre-defined plan. This isn't just about brainstorming; it's about transforming a broad topic into a laser-focused question and then building a strict protocol around it. Get this part right, and the rest of your review will be rigorous, transparent, and reproducible.

Building a Bulletproof Foundation for Your Review

Before you even think about opening PubMed or Scopus, the success of your review is already on the line. This initial stage is where you set the ground rules that prevent your project from spiraling out of control. Without this clear plan, you risk ending up with a jumbled collection of studies instead of a powerful, coherent synthesis of evidence.

Your very first task is to nail down a sharp, answerable research question. A vague query like, "What’s the impact of social media on teens?" is a dead end for a systematic review. It’s simply too broad to support clear inclusion and exclusion criteria.

From Vague Idea to Answerable Question

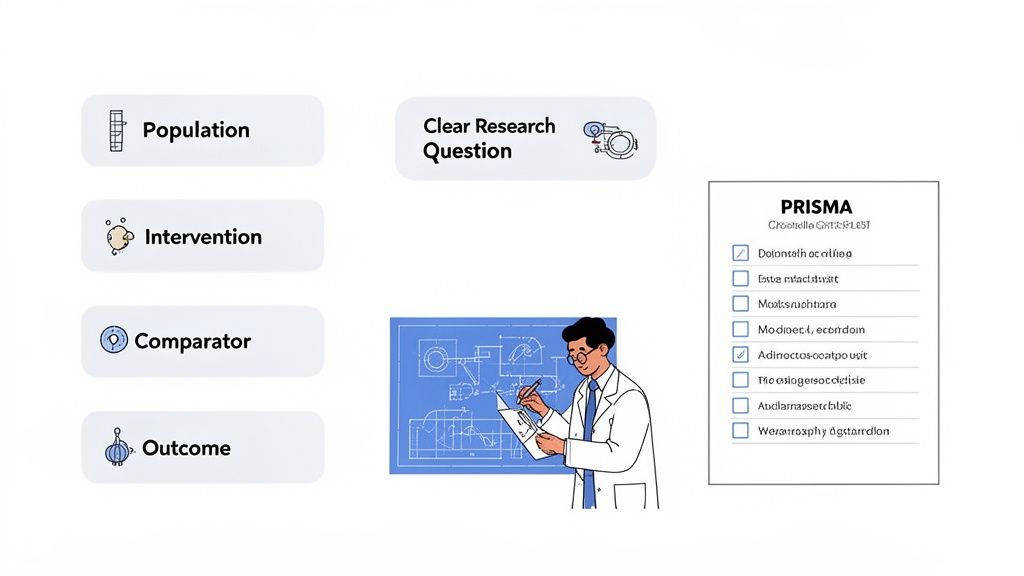

This is where established frameworks come into play. To get that necessary clarity, most researchers I know lean on PICO (Population, Intervention, Comparator, Outcome) or one of its variations. Using a framework like this forces you to get specific about who you're studying, what you're measuring, and how.

Let's walk through a quick example. Say you're interested in "workplace wellness programs." That's a huge topic. Applying PICO helps you drill down:

- Population: Office workers in the tech industry.

- Intervention: Employer-provided subscriptions to a mindfulness app.

- Comparator: No formal wellness program offered.

- Outcome: A measurable change in self-reported stress levels.

Suddenly, your vague idea becomes a focused, testable question: "For office-based tech employees, do employer-provided mindfulness apps reduce self-reported stress compared to having no intervention?" Now you have something you can actually work with. For more detail, see this detailed analysis of PICO-based question formulation.
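If it helps to see the framework as a structure, here is a minimal sketch that stores the four PICO elements from the example above and assembles them into a question. The class name `PICOQuestion` and the sentence template are illustrative assumptions, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PICOQuestion:
    """Hypothetical container for the four PICO elements."""
    population: str
    intervention: str
    comparator: str
    outcome: str

    def as_question(self) -> str:
        # Assemble the four elements into one answerable sentence.
        return (
            f"For {self.population}, does {self.intervention} "
            f"change {self.outcome} compared to {self.comparator}?"
        )


question = PICOQuestion(
    population="office workers in the tech industry",
    intervention="an employer-provided mindfulness app subscription",
    comparator="no formal wellness program",
    outcome="self-reported stress levels",
)
print(question.as_question())
```

Because every field is required, leaving out any one of the four elements raises an error straight away, which is a small nudge toward a complete question.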

Developing Your Review Protocol

With a clear question in hand, your next move is to write it all down in a review protocol. Think of this document as the constitution for your entire project. It lays out every single decision before you start screening articles, which is crucial for maintaining consistency and transparency. A good protocol often clarifies the anticipated cause-and-effect relationships, a process quite similar to developing a Theory of Change.

A key step here is to register your protocol publicly on a platform like PROSPERO, which accepts reviews with health-related outcomes. This isn't just for show. It helps other teams avoid duplicating your work and, more importantly, it holds you accountable to the methods you laid out from the start.

Expert Tip: A well-written protocol is your single best defense against bias. It forces you to lock in your methods before you see any results, which stops the all-too-human temptation to move the goalposts to fit a narrative you want to see.

A protocol is the blueprint for the whole review. If you're struggling to articulate the complex methodological details, a skilled literature review writer can be invaluable in getting it right.

Before you start your search, your protocol needs to be comprehensive. The table below outlines the essential components that every robust protocol should include.

Key Components of Your Review Protocol

| Component | Why It Matters | Example Element |
|---|---|---|
| Research Question | Defines the scope and focus. | The PICO-formatted question you developed. |
| Search Strategy | Ensures your search is exhaustive and reproducible. | List of databases (e.g., PubMed, Scopus) and preliminary search terms. |
| Inclusion/Exclusion Criteria | Provides clear, objective rules for screening studies. | "Must include randomized controlled trials; exclude case studies." |
| Data Extraction Plan | Dictates what specific information you will pull from each study. | A template listing variables like sample size, intervention details, and key outcomes. |
| Quality Appraisal Tool | Specifies how you will assess the risk of bias in included studies. | "We will use the Cochrane Risk of Bias tool (RoB 2)." |
| Synthesis Method | Outlines how you will combine and analyze the findings. | "A narrative synthesis will be conducted; if data is homogenous, a meta-analysis will be performed." |

Having these elements defined upfront makes your methodology defensible and the entire review process smoother.
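One lightweight way to confirm a draft protocol covers all six components is a completeness check like the sketch below. The key names in `REQUIRED_COMPONENTS` and the sample draft are assumptions made up for illustration; adapt them to whatever template your team uses.

```python
# Hypothetical key names mirroring the six protocol components above.
REQUIRED_COMPONENTS = [
    "research_question",
    "search_strategy",
    "eligibility_criteria",
    "data_extraction_plan",
    "quality_appraisal_tool",
    "synthesis_method",
]


def missing_components(protocol: dict) -> list:
    """Return the required components that are absent or left blank."""
    return [
        name
        for name in REQUIRED_COMPONENTS
        if not str(protocol.get(name, "")).strip()
    ]


# Illustrative draft: one component is blank and one is missing entirely.
draft_protocol = {
    "research_question": "Do employer-provided mindfulness apps reduce stress?",
    "search_strategy": "PubMed, Scopus; preliminary terms drafted",
    "eligibility_criteria": "Include randomized controlled trials; exclude case studies",
    "data_extraction_plan": "",
    "quality_appraisal_tool": "Cochrane RoB 2",
}

print(missing_components(draft_protocol))
```

Running a check like this before registration is a cheap way to catch a forgotten section while it is still easy to fix.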

Adhering to PRISMA Guidelines

Finally, let’s talk about standards. Your protocol, and the final manuscript, should be built around the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement.

PRISMA provides an evidence-based checklist of 27 items that are now the gold standard for reporting in systematic reviews. Following these guidelines is no longer optional in my book; it’s what top-tier journals expect and it’s a clear sign of high-quality research. The whole point is to make your methods so transparent that another researcher could, at least in theory, follow your steps and reach the same conclusions.

Executing a Comprehensive Search Strategy

Your systematic review lives or dies by its search. If your search is sloppy or incomplete, you're guaranteed to miss evidence, and that fundamentally cripples your conclusions. This is where the rubber meets the road—where your carefully planned protocol gets put into action. It requires a patient, methodical approach to make sure you find every relevant study out there.

This isn't just a keyword search on Google Scholar. Think of it more like a structured, multi-pronged attack across different sources to build a truly comprehensive evidence base. The aim is to cast a wide net that captures everything you need without becoming completely unmanageable. Get this right, and your results will be as unbiased as possible.

Choosing Your Battlegrounds: The Databases

First things first, you need to pick the right academic databases. Relying on a single source is a rookie mistake. Different databases index different journals, each with its own quirks and strengths, which is why a multi-database approach is non-negotiable for any serious systematic literature review methodology.

For most topics, you'll probably start with a core trio:

- PubMed/MEDLINE: Absolutely essential for any health or biomedical research. Its MeSH (Medical Subject Headings) system is a game-changer for precise searching.

- Scopus: This one gives you broad, multidisciplinary coverage. It's fantastic for finding international journals and conference papers you might otherwise miss.

- Web of Science: Known for its high-quality journal selection and citation tracking. It’s perfect for finding seminal papers and seeing who has cited them.

Of course, you might need to add more specialized databases depending on your field. Working on a psychology review? You can't skip PsycINFO. In education? ERIC is your go-to. The most important thing is to write down which databases you searched and your rationale for choosing them. It’s all about transparency.

Building Powerful Search Strings

Okay, you've picked your databases. Now it's time to translate your PICO question into a language the databases can actually understand. This means building search strings by combining keywords and controlled vocabulary (the database's own index terms) with Boolean operators like AND, OR, and NOT.

Let's go back to our PICO question: "For office-based tech employees (P), do employer-provided mindfulness apps (I) reduce self-reported stress (O) compared to no intervention (C)?"

A quick-and-dirty search might just be "mindfulness app" AND "stress". A systematic search is way more sophisticated. You'll build out a search concept for each part of your PICO, brainstorming synonyms and finding the database's specific index terms.

For the "Intervention" concept, for instance, your string might end up looking something like this:

(mindfulness OR meditation OR "mental wellness app" OR Headspace OR Calm) AND (app OR application OR mobile OR smartphone)

My biggest tip here: The real power comes from combining your own keywords (the natural language terms you'd use) with the database's official subject headings (like MeSH in PubMed). This two-pronged approach ensures you catch articles that use different jargon for the same concept, making your search so much more thorough.
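To make the two-pronged idea concrete, here is a rough sketch that assembles a PubMed-style query from free-text keywords and subject headings. The `[Title/Abstract]` and `[MeSH Terms]` field tags are PubMed's own, but the helper functions are hypothetical, and the headings used are assumptions you should verify in the MeSH browser before running a real search.

```python
def free_text(term: str) -> str:
    """Tag a keyword for title/abstract searching, quoting phrases."""
    phrase = f'"{term}"' if " " in term else term
    return f"{phrase}[Title/Abstract]"


def mesh(heading: str) -> str:
    """Tag a controlled-vocabulary heading as a MeSH term."""
    return f'"{heading}"[MeSH Terms]'


def concept(keywords, headings=()):
    """OR together every synonym and heading for one PICO concept."""
    parts = [mesh(h) for h in headings] + [free_text(k) for k in keywords]
    return "(" + " OR ".join(parts) + ")"


def build_query(*concepts: str) -> str:
    """AND the concept blocks so a record must match each one."""
    return " AND ".join(concepts)


# Headings below are assumed examples; confirm them before use.
intervention = concept(
    ["mindfulness", "meditation", "mental wellness app"],
    headings=["Mindfulness"],
)
delivery = concept(["app", "smartphone"], headings=["Mobile Applications"])

query = build_query(intervention, delivery)
print(query)
```

Other databases use different field tags and their own thesauri, so a builder like this needs a variant per database. Saving the exact string it produces also gives you the search record your methods section needs.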

Beyond the Usual Suspects: The Grey Literature

It's a classic mistake to only look at published, peer-reviewed journal articles. If you do, you're walking straight into publication bias, which is the tendency for studies with exciting or statistically significant results to get published while others languish on a hard drive. To get around this, you have to dig into the grey literature.

This is all the stuff that isn't commercially published. We're talking about:

- Dissertations and theses (check out ProQuest)

- Conference proceedings and abstracts

- Clinical trial registries like ClinicalTrials.gov

- Reports from government agencies or NGOs

Hunting down grey literature can feel like a bit of a slog—it’s often more manual and takes more time. But trust me, it’s a crucial part of a rigorous systematic literature review methodology. It gives you a much more balanced view of the evidence, including those studies with null or negative findings that are essential for an honest synthesis. And just like with your database searches, you need to document exactly where you looked. Reproducibility is key.

Screening and Extracting Your Data

You've run your searches, and now you’re staring at a mountain of results. It’s not uncommon to have thousands of hits. This is where the real work begins: sifting through this digital haystack to find the needles—the studies that truly answer your research question.

This part of the process is all about careful, methodical filtering. It’s less about discovery and more about disciplined decision-making.

The Two-Stage Screening Funnel

The best way to tackle this is with a two-stage approach. First up is the title and abstract screening. Think of this as a rapid triage. You’ll read the title and abstract of every single result, making a quick call: "yes," "no," or "maybe." You're just trying to weed out the obviously irrelevant papers based on your inclusion criteria. Speed is your friend here.

Next, for every paper that survived that first cut, it’s time for a full-text review. This is a much deeper dive. You’ll get your hands on the complete article and read it carefully, checking it against your full list of inclusion and exclusion criteria before making a final decision.

Why Two Reviewers Are Non-Negotiable

Here’s a critical piece of advice: a robust systematic review always involves at least two independent reviewers for the screening process. This isn't just a "nice-to-have"; it’s essential for minimizing bias and catching human error. What one person considers "relevant" might be interpreted differently by another. Having that second set of eyes is your quality control.

You should expect disagreements—they're a normal part of the process. When two reviewers can’t agree on a study, they should first talk it out. If they’re still at a stalemate, a third, senior reviewer usually steps in to break the tie. Documenting how you handle these conflicts is a key marker of a high-quality review.

I see this mistake all the time: teams split the workload, with one person taking the first 500 results and another taking the next 500. This completely defeats the purpose. To be truly independent, both reviewers need to screen all the same articles.
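Here is a minimal sketch of how the dual-screening check could work once both reviewers have finished. It assumes each reviewer's decisions are stored as a dictionary keyed by record ID; the IDs and decisions are invented for illustration.

```python
def find_conflicts(reviewer_a: dict, reviewer_b: dict) -> list:
    """Return the record IDs where two independent reviewers disagree."""
    # Independence only works if both reviewers screened the same records.
    if reviewer_a.keys() != reviewer_b.keys():
        raise ValueError("Both reviewers must screen the same set of records")
    return sorted(
        record_id
        for record_id in reviewer_a
        if reviewer_a[record_id] != reviewer_b[record_id]
    )


# Invented example decisions from title and abstract screening.
reviewer_a = {
    "rec001": "include",
    "rec002": "exclude",
    "rec003": "maybe",
    "rec004": "exclude",
}
reviewer_b = {
    "rec001": "include",
    "rec002": "include",
    "rec003": "exclude",
    "rec004": "exclude",
}

conflicts = find_conflicts(reviewer_a, reviewer_b)
agreement = 1 - len(conflicts) / len(reviewer_a)

print("Needs discussion:", conflicts)
print(f"Raw agreement: {agreement:.0%}")
```

The conflict list is what goes to discussion and, if needed, to the third reviewer. The `ValueError` guards against the split-workload mistake described above, where the two reviewers never actually see the same articles.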

Specialized review software can make managing this a lot easier. The flowchart below shows how a well-planned search strategy sets you up for this screening phase.

As you can see, defining your PICO, building your search terms, and selecting your sources all lead directly into this screening workflow.

Moving from Screening to Data Extraction

Once you have your final, curated list of studies, it’s time to pull out the information you need. This is called data extraction. You'll systematically lift specific pieces of data from each article and organize them in a pre-designed template, like a spreadsheet. It’s a meticulous process that lays the groundwork for your final analysis. For complex projects, it helps to understand the principles behind knowledge base software for organizing information to keep everything tidy.

Before you start, pilot your data extraction form on a handful of studies. This helps you refine it, ensuring it captures everything you need without becoming too cumbersome. You'll almost certainly want to include:

- Study Info: Author(s), publication year.

- Study Design: Was it an RCT, a cohort study, etc.? What was the sample size and country?

- Population: Key demographics like age, gender, and the specific condition being studied.

- Intervention/Comparator: Details like dosage, duration, and frequency.

- Outcomes: The measurement tools used and the main results, including any statistics.

Just like with screening, the gold standard is to have two people extract data independently. This simple step is a powerful check against typos and misinterpretations. If you’re juggling dozens of articles, check out our guide on how to extract information from PDF files for some time-saving tips. This dual-reviewer approach at every step ensures the data you ultimately analyze is as clean and trustworthy as possible.
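As a sketch of what a pre-designed template and a dual-extraction check might look like in code, the example below defines one record per study and reports any fields where two extractors disagree. The field names follow the list above, but the class, the helper, and the study values are all hypothetical placeholders.

```python
from dataclasses import dataclass, asdict, replace


@dataclass
class ExtractionRecord:
    """Hypothetical extraction template: one record per included study."""
    study_id: str
    authors: str
    year: int
    design: str
    sample_size: int
    country: str
    population: str
    intervention: str
    comparator: str
    outcome_measure: str
    main_result: str


def field_mismatches(first: ExtractionRecord, second: ExtractionRecord) -> dict:
    """Map each disagreeing field to the pair of values entered."""
    a, b = asdict(first), asdict(second)
    return {name: (a[name], b[name]) for name in a if a[name] != b[name]}


# Placeholder values, not taken from any real study.
extractor_one = ExtractionRecord(
    study_id="S01",
    authors="Example et al.",
    year=2020,
    design="RCT",
    sample_size=120,
    country="Placeholder",
    population="Office workers",
    intervention="Mindfulness app, 8 weeks",
    comparator="No program",
    outcome_measure="Self-reported stress scale",
    main_result="Placeholder result",
)
# The second extractor transposed two digits in the sample size.
extractor_two = replace(extractor_one, sample_size=210)

print(field_mismatches(extractor_one, extractor_two))
```

An empty result means the two extractions match. Anything else is a field to re-check against the paper before it reaches your analysis.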

Critically Appraising Study Quality and Bias

Just because a study made it through peer review and into a journal doesn't mean its conclusions are gospel. A fundamental, non-negotiable part of any serious systematic literature review methodology is the critical appraisal of every single study you include. This is where you shift from just collecting papers to rigorously assessing their quality and potential for bias.

Think of yourself as a detective. You've gathered all the evidence (the studies), but now you have to evaluate the credibility of each piece. A study with a shaky design can produce misleading results, and if you include it in your review without flagging its weaknesses, you risk compromising your own conclusions. This is where you put on your critical thinking hat and determine the real strength of the evidence you've found.

Choosing the Right Appraisal Tools

The good news is you don't have to invent a way to assess quality from scratch. Over the years, the research community has developed a whole suite of standardized checklists and tools, each designed for a specific type of study. Using an established tool keeps your appraisal consistent, transparent, and grounded in accepted best practices.

The tool you pick really depends on the study designs you're working with.

For example, if your review includes other systematic reviews, the gold standard is the AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews) checklist. For randomized controlled trials (RCTs), the go-to is almost always the Cochrane Risk of Bias (RoB 2) tool.

A few other excellent options include:

- CASP Checklists: The Critical Appraisal Skills Programme offers a fantastic set of straightforward checklists for everything from cohort studies to qualitative research.

- Newcastle-Ottawa Scale (NOS): This is a popular choice for assessing the quality of non-randomized studies, especially when you're planning a meta-analysis.

The trick is to choose one appropriate tool for each study type and stick with it. Applying it consistently across all included studies is the only way to ensure a fair, methodical comparison, which is the bedrock of a defensible review.

Expert Takeaway: Critical appraisal isn't about hunting for the mythical "perfect" study—they're incredibly rare. It's about systematically identifying potential flaws so you can understand how they might have influenced the results. This context is everything when it comes time to synthesize your findings.

Identifying Common Types of Bias

In the research world, bias refers to any systematic error in how a study was designed, conducted, or analyzed that leads to results that deviate from the truth. Learning to spot these red flags is a skill that takes practice. It’s more than just ticking boxes on a checklist; it requires you to deeply and critically engage with each paper. For a great primer on this, check out our guide on how to read scientific papers.

Here are some of the usual suspects you'll need to watch out for:

- Selection Bias: This happens when the groups being compared aren't truly similar from the get-go. A classic sign is the lack of proper randomization in an RCT.

- Performance Bias: This creeps in when participants or researchers know who is receiving the intervention, which can change their behavior or how they report outcomes. Proper "blinding" is the main safeguard here.

- Attrition Bias: This is a big one. It occurs when there are systematic differences in who drops out of the study between groups, which can easily skew the final results.

- Reporting Bias: This is the tendency for researchers to selectively publish their most favorable or statistically significant outcomes while ignoring the less exciting ones.

The Cochrane Handbook has long set the global standard for systematic review methodology, and its focus on risk of bias assessment is a core part of that. Tools like AMSTAR 2 and the Cochrane Risk of Bias tool aren't just suggestions anymore; they're considered essential instruments for any high-quality review. Applying them rigorously is how you ensure the integrity of your final conclusions.

Synthesizing the Evidence and Interpreting Findings

After all that hard work of searching, screening, and appraising, you’re finally left with your core set of high-quality studies. This is where the magic happens. The synthesis stage is where you transform all those individual data points into a single, cohesive story that answers your research question.

There's no one-size-fits-all method for this part of the process. The right approach hinges entirely on the kind of data you've managed to extract. If your studies are all over the map in terms of methods or outcomes, trying to force them into a statistical model would be like fitting a square peg in a round hole—it just doesn't work. On the other hand, if you've found a neat group of similar studies, pooling their results can give you a powerful and precise answer. This is a critical decision point in your systematic literature review methodology that sends you down one of two main paths.

The Art of Narrative Synthesis

When the studies in your review are too different—what we call heterogeneous—to be combined statistically, a narrative synthesis is the way to go. This isn't just a simple summary; it's a structured approach where you group findings by theme and weave them into a descriptive story.

Think of yourself as a detective piecing together clues from different sources. Each study is a piece of evidence, and your job is to arrange them to reveal the bigger picture. In practice, this usually involves:

- Thematic Analysis: Sifting through the studies to find recurring themes, concepts, or patterns.

- Grouping and Clustering: Organizing studies based on common ground, like the population they studied, the intervention they tested, or the outcomes they measured.

- Tabulation: Creating detailed tables that lay out the key features and results of each study, making it easy for readers to see how they stack up.

A common mistake here is "vote counting"—simply tallying up how many studies found a positive, negative, or neutral effect. This is a really weak way to synthesize evidence because it ignores crucial factors like study size and quality. A good narrative synthesis, instead, offers thoughtful commentary on the evidence, pointing out where studies agree and, just as importantly, where they don't.

Expert Insight: The real strength of a narrative synthesis is its ability to embrace complexity. It gives you the space to explore why results might differ from one study to the next, providing a rich context that a purely statistical approach can easily miss.

The Power of Meta-Analysis

Now, if you hit the jackpot and your included studies are quite similar in their design, population, and outcomes, you can conduct a meta-analysis. This is the statistical powerhouse of a systematic review. You mathematically combine the numerical results from multiple studies to calculate a single, overall effect. For many researchers, this is the ultimate goal, as it provides a far more precise and reliable estimate of an intervention's true impact than any single study ever could.

Meta-analysis has grown much more sophisticated over the years. As you'll find in recent guides, it requires specialized software like R (using packages such as metafor) or Cochrane's RevMan. These tools help you compute effect sizes, check confidence intervals, and, crucially, assess the level of variation across studies. You can read the full research about these statistical methods to really get into the weeds. The final output is often a forest plot, a brilliant visualization that shows each study's result alongside the combined, overall effect.

The diamond at the bottom of the forest plot is the star of the show: it represents the pooled result, the single most important finding from your meta-analysis. But you can't just take it at face value. You have to carefully consider heterogeneity, meaning how much the results vary between studies beyond what chance alone would explain. It is commonly quantified with the I² statistic, which estimates the percentage of the total variation across studies that reflects genuine differences rather than sampling error. If heterogeneity is high, it’s a red flag that the studies are inconsistent, and interpreting the pooled effect as a single "truth" becomes risky. Instead, you'll need to dig deeper, maybe with subgroup analyses, to figure out what's driving those differences. It's in this final interpretation that you truly tell the story of what the collective evidence means.
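To show what sits behind the pooled estimate and the I² figure, here is a bare-bones sketch of an inverse-variance, fixed-effect pooling with Cochran's Q and I². It is a teaching aid under simplifying assumptions (a fixed-effect model and a normal-approximation 95% interval), not a substitute for metafor or RevMan, and the effect sizes are invented.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I²."""
    # More precise studies (smaller standard errors) get larger weights.
    weights = [1 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)

    # Cochran's Q: weighted squared deviations from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I²: share of variation beyond what chance would predict, floored at 0.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    return {
        "pooled": pooled,
        "ci_95": (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se),
        "q": q,
        "i_squared": i_squared,
    }


# Invented standardized mean differences and standard errors.
effects = [-0.30, -0.10, -0.50]
std_errors = [0.10, 0.10, 0.10]

result = pool_fixed_effect(effects, std_errors)
print(f"Pooled effect: {result['pooled']:.2f}")
print(f"I-squared: {result['i_squared']:.0f}%")
```

With these made-up numbers the three studies carry equal weight, the pooled effect lands at about -0.30, and I² comes out at roughly 75%, the kind of value that would send you looking at subgroups or a random-effects model rather than trusting the diamond as is.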

Got Questions? We've Got Answers

Even with the best-laid plans, a systematic review will throw curveballs. It’s a rigorous process, and it’s completely normal for questions to pop up along the way, whether you’re a seasoned pro or tackling your first one. Let’s clear up a few of the most common head-scratchers I hear from researchers.

Getting these fundamentals straight will help you appreciate the rigor involved and keep your project on solid ground from the very start.

Isn't This Just a Fancy Literature Review?

Not at all. While people often use "literature review" as a catch-all, a systematic review is a completely different animal. Think of a traditional or narrative review as an expert’s tour of a topic. The author selects articles to tell a story or set the stage for new research, but the method for choosing those articles isn't always transparent or repeatable.

A systematic review, on the other hand, is a scientific study in its own right. It starts with a very specific question and uses a strict, pre-defined protocol to find, assess, and synthesize all the relevant evidence out there. The goal is to minimize bias and be so transparent that another researcher could follow your steps and get the same results. It’s secondary research held to the same methodological standards as a primary study, not just a summary.

What's the Hardest Part of a Systematic Review?

Honestly, the challenges are many, but a few always rise to the top. The first is the sheer volume of work. You’re going to be screening an avalanche of studies, and it’s incredibly time-consuming. We're not talking about a weekend project; a typical systematic review can easily take over 1,100 hours to complete.

Another huge hurdle is designing a search strategy that’s truly exhaustive. If you miss key studies, you've introduced bias right from the start. Then there’s the headache of heterogeneity—what do you do when the studies you find are too different in their methods or patient populations to be neatly combined in a meta-analysis? That's a classic problem that requires careful thought.

A Quick Word on Bias: One constant battle is with publication bias. It's a known phenomenon where studies with exciting, positive results get published more easily than studies with null or negative findings. A solid systematic review has to account for this, often by digging into "grey literature" (like conference proceedings or dissertations) to get a more complete and unbiased view of the evidence.

Finally, just keeping everything organized and documenting every single decision you make requires a level of discipline that can be tough to maintain over a long project.

Why Does Everyone Keep Talking About PRISMA?

Because it’s the gold standard, plain and simple. PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) is a 27-item checklist and flow diagram that dictates how you should report your review. You can’t overstate its importance.

Following PRISMA is your way of showing the world that you did the work correctly and transparently. It allows readers, peer reviewers, and other researchers to understand, critique, and even replicate your entire process.

Think of it as the ultimate quality assurance for your final report.

- Builds Credibility: Adhering to PRISMA signals to the scientific community that your review is methodologically sound.

- Ensures Reproducibility: It’s a detailed blueprint of everything you did, from your search strategy to your synthesis.

- Provides Clarity: The famous PRISMA flow diagram gives a perfect, at-a-glance summary of how you filtered thousands of articles down to your final few.

These days, most high-impact journals won't even consider a systematic review that doesn't follow PRISMA guidelines. It’s simply non-negotiable.
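The numbers in a PRISMA flow diagram are just careful bookkeeping, and a small sketch shows how they chain together. The function below is a hypothetical helper with simplified stages (real diagrams can include more boxes, such as records from other sources), and the counts are invented.

```python
def prisma_flow(identified, duplicates_removed, excluded_title_abstract,
                full_text_exclusions):
    """Derive the main PRISMA flow counts from each filtering stage."""
    screened = identified - duplicates_removed
    full_text_assessed = screened - excluded_title_abstract
    included = full_text_assessed - sum(full_text_exclusions.values())
    # Negative counts mean the logged numbers do not add up.
    if min(screened, full_text_assessed, included) < 0:
        raise ValueError("Counts are inconsistent; check the screening log")
    return {
        "identified": identified,
        "screened": screened,
        "full_text_assessed": full_text_assessed,
        "included": included,
    }


# Invented counts; exclusion reasons are recorded at the full-text stage.
flow = prisma_flow(
    identified=2400,
    duplicates_removed=400,
    excluded_title_abstract=1850,
    full_text_exclusions={"wrong population": 90, "wrong study design": 40},
)

for stage, count in flow.items():
    print(f"{stage}: {count}")
```

Keeping the exclusion reasons as a dictionary mirrors what the diagram asks for: not just how many full-text articles were dropped, but why.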

Can I Just Do a Systematic Review By Myself?

Technically, you could, but you absolutely shouldn't. It goes against the very principles of the methodology. Leading guidelines from groups like Cochrane are crystal clear on this: you need at least two independent reviewers for the critical stages, especially study screening and data extraction.

This isn't just bureaucratic red tape; it’s a fundamental safeguard against bias. Having a second person screen every article is your best defense against your own unconscious biases and simple human error. One researcher might misinterpret an inclusion criterion, get tired and miss something, or accidentally overlook a key paper.

If you’re a solo researcher, it’s far better to adjust your project. You could narrow your question significantly or choose a different methodology, like a scoping review. If you compromise on the dual-reviewer standard, you’re seriously undermining the "systematic" part of your review, and your findings just won't be as trustworthy.
